As we saw in our previous article, most IoT products do not embed the same computing power and storage capacity as our computers or servers. However, with some care, it seems possible to integrate more and more intelligence into those objects.

Is that enough to implement machine learning algorithms? If so, how can we do it and still keep the embedded software reliable and predictable? That is the subject of this article.

3 – “Machine learning”: what is(n’t) it?

Before going into the details of how machine learning works, let’s first have a look at what machine learning is NOT (or does not have to be):

3.1 No self-learning

The device won’t have to build by itself new algorithms able to detect some events that are initially unknown. The idea that “by collecting millions of data samples over time, the product could end up recognizing some events previously encountered” and “by using some other criteria it may understand what this event means” is way beyond what we are talking about here.

Quite the opposite: the detection algorithms will be defined before being implemented in the product. Defining them is what we call the learning phase.

3.2 The learning phase is not done within the final product

One should not confuse this learning phase, which can be performed at an R&D stage even before product development starts, with the use in a product of the algorithms found during this learning phase.

In the overall process, this learning phase is the most resource-consuming step, both in the amount of data to be analyzed and in the computing power required to build all the potential algorithms. So it does not have to be performed on the final product, but rather on a dedicated, powerful PC or server.

The output of this phase will be an algorithm able to detect different events, and only this algorithm will have to be implemented into the final product.

3.3 No creativity

The machine learning software that we are going to use for the learning phase will not create the different events that we would like to detect. We will have to give it, as an input, classified data. That means that for each data sample we will have to indicate which event this sample corresponds to.

3.4 How machine learning works

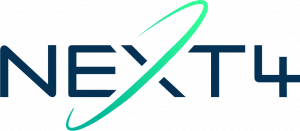

The machine learning process takes place in 3 steps:

- data classification: all the recorded data samples have to be, sometimes manually, linked to one of the events that we want to detect.

- learning phase: based on this classified data, software (running on a PC or server) will try to find the best criteria and algorithms to detect the events. Usually, a large part of the data is used to find the algorithms and the remaining part is used to test their reliability and performance.

- implementation of the best algorithm in the final product.
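
The three steps above can be sketched end to end in a few lines. This is only an illustration under strong assumptions: the samples are synthetic, the single metric (`peak_to_peak_mg`), the labels (`"idle"`, `"event"`) and every function name are hypothetical, and the "learning" is reduced to finding one threshold, which is far simpler than what real learning software does.

```python
import random

# Step 1 - data classification: every sample is stored with its label.
# The values are synthetic and only stand in for recorded, labeled data.
def make_labeled_samples(seed=0, count=200):
    rng = random.Random(seed)
    samples = []
    for _ in range(count):
        if rng.random() < 0.5:
            samples.append((rng.randint(50, 400), "idle"))
        else:
            samples.append((rng.randint(600, 2000), "event"))
    return samples

def accuracy(samples, threshold):
    """Fraction of samples for which 'value > threshold' matches the label."""
    hits = sum(1 for value, label in samples
               if (label == "event") == (value > threshold))
    return hits / len(samples)

# Step 2 - learning phase (on a PC or server): try every observed value
# as a threshold and keep the one that best separates the training data.
def learn_threshold(training):
    candidates = sorted({value for value, _ in training})
    return max(candidates, key=lambda t: accuracy(training, t))

samples = make_labeled_samples()
split = int(len(samples) * 0.8)          # large part to learn, rest to test
training, testing = samples[:split], samples[split:]
threshold = learn_threshold(training)
test_accuracy = accuracy(testing, threshold)

# Step 3 - implementation: only this integer comparison has to run
# in the final product.
def detect_event(peak_to_peak_mg):
    return peak_to_peak_mg > threshold
```

The point of the sketch is the split of responsibilities: everything above `detect_event` runs offline, and the product only carries the learned constant and one comparison.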

4 – How to adapt the machine learning process to our constraints?

In the IoT world, there are plenty of products that have nearly the same computing power or storage capacity as a computer. But they usually aren’t “low-power”: either they are mains-powered (a connected camera, for instance) or their battery has to be recharged very often (a GPS watch, for instance).

We are looking here at low-power, long-battery-life products with extremely limited resources. The following guidelines aim to adapt the machine learning process to those constraints.

4.1 Choosing the right tools

Before starting, we have to choose the software tools that we are going to use. Some are better suited than others to specific targets.

The final product’s microprocessor (or more likely microcontroller) manufacturer may already have machine learning demos or examples: re-using the same tools may be a good starting point.

4.2 No float!

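It is the #1 rule in embedded software development: avoid computing on floating-point variables (aka “decimal numbers”)! Many low-power microcontrollers have no hardware floating-point unit, so such computations are slow and costly.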

Most learning software tools (if not all of them) do not take this constraint into account. However, it is possible to convert floating-point values into integers, aka whole numbers (for instance, rather than a 1.589 g acceleration we can consider a 1589 mg acceleration), and to adapt the calculations and formulas to keep a satisfactory accuracy with those integers.
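
As a minimal sketch of that conversion, the snippet below turns a value in g into an integer number of mg, then adapts one formula so that it stays in integers. The function names and the choice of the magnitude test are assumptions made for the example; the idea shown is that comparing squared values avoids both the floating-point numbers and the square root.

```python
def g_to_mg(acceleration_g):
    """Convert once, at the boundary: 1.589 g becomes 1589 mg."""
    return round(acceleration_g * 1000)

def magnitude_squared_mg2(x_mg, y_mg, z_mg):
    """Squared norm of the acceleration vector, in mg^2, integers only."""
    return x_mg * x_mg + y_mg * y_mg + z_mg * z_mg

def exceeds_threshold(x_mg, y_mg, z_mg, threshold_mg):
    # Comparing squares gives the same answer as comparing norms
    # (both sides are non-negative) and needs no square root.
    return magnitude_squared_mg2(x_mg, y_mg, z_mg) > threshold_mg * threshold_mg
```

Python integers never overflow, but on a microcontroller the intermediate products would have to be checked against the width of the integer type in use.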

4.3 Choosing the right metrics

Most of the time, event detection won’t be based on the raw data itself but rather on data variation profiles. So it will be necessary to pre-compute some kind of statistics on the raw data. Those are called metrics.

The algorithm found during the learning process will be based on those metrics. Those same metrics will therefore have to be computed in the final product in order to implement the algorithm.

Therefore it is a good idea to start with simple (easy-to-compute) metrics such as average, peak-to-peak, min, max, etc., and to consider more complex ones (variance, standard deviation, etc.) only if the first ones do not provide good results.
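
To give an idea of the difference in cost, here is a sketch of those metrics computed on integer samples (in mg, following the convention of section 4.2). The function names are hypothetical. The simple metrics need only comparisons, additions and one division, while the variance adds a multiplication per sample and much larger intermediate values.

```python
def simple_metrics(samples_mg):
    """Cheap metrics: comparisons, additions and one integer division."""
    lowest = min(samples_mg)
    highest = max(samples_mg)
    return {
        "min": lowest,
        "max": highest,
        "peak_to_peak": highest - lowest,
        # '//' is integer division. It rounds down in Python, whereas C
        # truncates toward zero, which matters for negative sums.
        "average": sum(samples_mg) // len(samples_mg),
    }

def integer_variance(samples_mg):
    """Population variance in mg^2 without floats: (n*sum(x^2) - sum(x)^2) / n^2."""
    n = len(samples_mg)
    total = sum(samples_mg)
    total_of_squares = sum(x * x for x in samples_mg)
    return (n * total_of_squares - total * total) // (n * n)
```

For the window `[1000, 1200, 800, 1000]`, the simple metrics are an average of 1000 mg, a min of 800 mg, a max of 1200 mg and a peak-to-peak of 400 mg, and the variance is 20000 mg².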

4.4 Choosing the right time window

The data and its metrics will be analyzed over a given period of time (for instance “the average acceleration over 10 seconds”). The longer this period is, the more memory space will be required to store the data and the more resource-consuming the metric computation will be.

So we should take care to keep this period as short as possible, or implement intermediate computations if that is not possible.

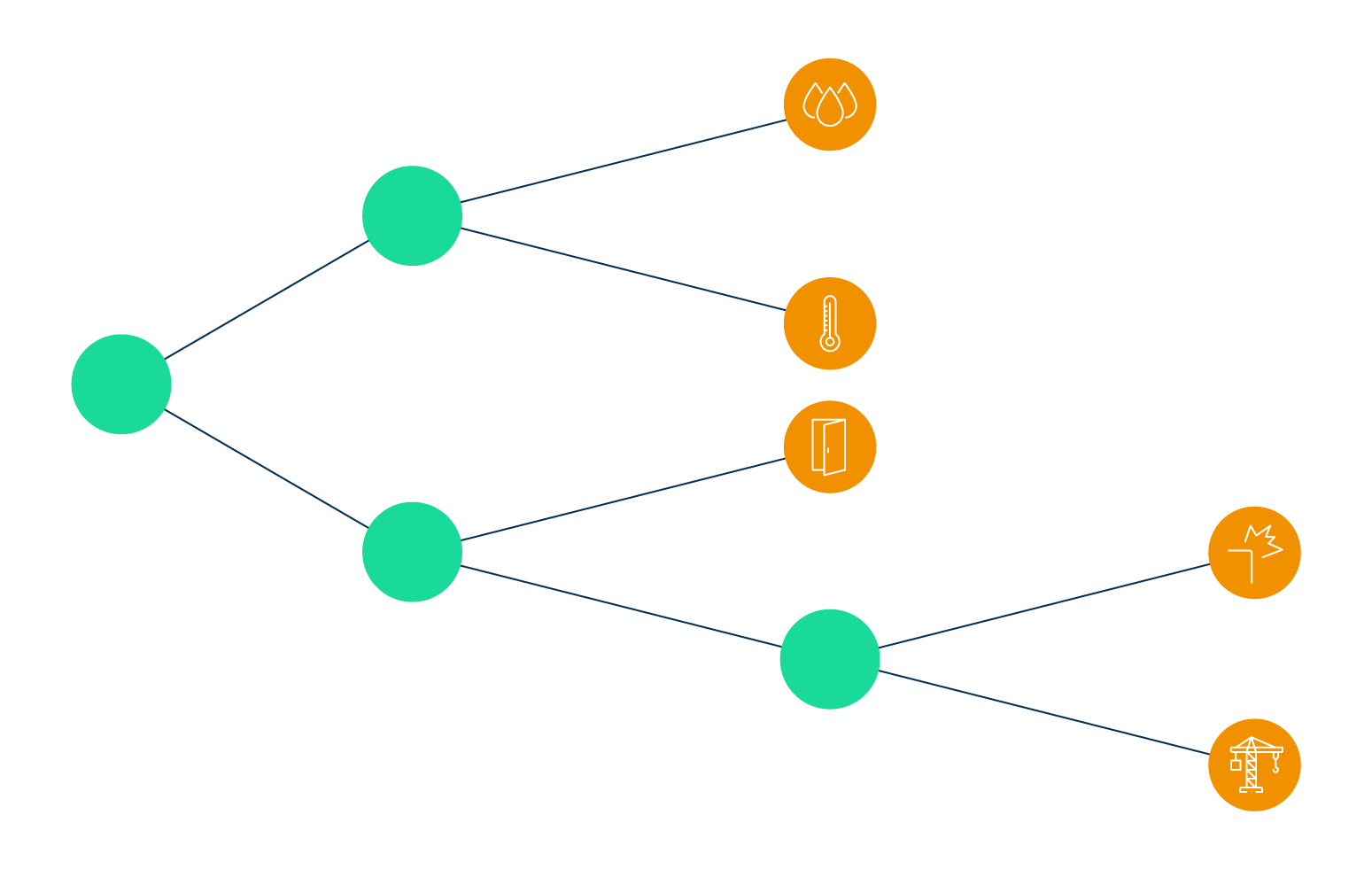
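
One possible form of intermediate computation, sketched here under assumptions, is to update a few running values each time a sample arrives instead of storing the whole window. The class name `WindowAccumulator` and its interface are hypothetical, and the windows are consecutive and non-overlapping, which may not match the scheme shown in the figure.

```python
class WindowAccumulator:
    """Keeps only four integers per window instead of every sample."""

    def __init__(self, window_size):
        self.window_size = window_size
        self.reset()

    def reset(self):
        self.count = 0
        self.total = 0
        self.lowest = None
        self.highest = None

    def push(self, sample_mg):
        """Add one sample; return the metrics when the window is complete, else None."""
        self.count += 1
        self.total += sample_mg
        if self.lowest is None or sample_mg < self.lowest:
            self.lowest = sample_mg
        if self.highest is None or sample_mg > self.highest:
            self.highest = sample_mg
        if self.count < self.window_size:
            return None
        metrics = {
            "average": self.total // self.count,
            "min": self.lowest,
            "max": self.highest,
            "peak_to_peak": self.highest - self.lowest,
        }
        self.reset()  # start the next window from scratch
        return metrics
```

With this approach the memory footprint no longer depends on the window length, although it only works for metrics that can be updated incrementally.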

4.5 Choosing the right type of algorithm

The learning software can try different kinds of algorithms. Here again, we will start by testing the simplest ones (decision trees for instance) before considering more complex ones if necessary.

This also makes it easier to “understand” the algorithm and to keep control of the system behavior (its reliability and predictability), and even to tune the algorithm manually later on (although this is usually not recommended in a machine learning process).
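
To illustrate why a decision tree is easy to understand and to implement, here is what a small one could look like once translated into code: nothing more than nested integer comparisons on the metrics. The thresholds, the metric choice and the event names (`"idle"`, `"vibration"`, `"lift"`) are hypothetical placeholders, not the output of a real learning phase.

```python
# Hypothetical thresholds, as a learning tool might output them.
PEAK_TO_PEAK_LIMIT_MG = 600
AVERAGE_LIMIT_MG = 1100

def classify_window(average_mg, peak_to_peak_mg):
    """A two-level decision tree: each node is one integer comparison."""
    if peak_to_peak_mg <= PEAK_TO_PEAK_LIMIT_MG:
        return "idle"
    if average_mg <= AVERAGE_LIMIT_MG:
        return "vibration"
    return "lift"
```

Every path through the tree can be read, reviewed and tested by hand, and the worst-case execution cost is known in advance: at most two comparisons here.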

4.6 Assess the performance of the resulting algorithm

Using a small portion of the input data as a test sample, the learning software will provide a performance ratio for each potential algorithm. Obviously the goal is to find and use the algorithm whose ratio is the closest to 100%.

However, each ratio should be weighed against the complexity of the algorithm (and of the corresponding metrics). Could we accept a lower success rate if that guarantees a more reliable system and better resource utilization?
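
One way to make that trade-off explicit, given here only as a sketch, is to accept any candidate whose success rate is within a chosen tolerance of the best one, then keep the cheapest. The `Candidate` type, the `cost` score and all the numbers below are hypothetical placeholders, not measured results.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Candidate:
    name: str
    success_rate: float  # fraction of test samples correctly detected
    cost: int            # relative complexity score (hypothetical scale)

def pick_candidate(candidates, tolerance=0.02):
    """Cheapest candidate whose success rate is within 'tolerance' of the best."""
    best_rate = max(c.success_rate for c in candidates)
    acceptable = [c for c in candidates
                  if c.success_rate >= best_rate - tolerance]
    return min(acceptable, key=lambda c: c.cost)

# Placeholder figures, for illustration only.
candidates = [
    Candidate("tree on min/max/average", 0.96, 1),
    Candidate("tree with variance", 0.97, 3),
    Candidate("more complex model", 0.975, 10),
]
chosen = pick_candidate(candidates)
```

With a tolerance of 2 points, the simplest tree is chosen even though it is not the best performer. With a tolerance of 0, the most complex candidate wins.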

Another lead to follow: wouldn’t it be possible to improve the success rate of a simpler algorithm? A better data classification to start with, or more input data, can make much more of a difference than changing the metrics or the algorithm type.

At Next4, following those basic guidelines, we were able to implement a crane lift-up detection algorithm in our shipping container tracker, without being limited by its microcontroller resources.

What other difficulties did we face? What added value does it bring compared to “empirical” algorithms? What other possibilities do we foresee? That will be the topic of the last article of this series.

by Julien Brongniart